French AI startup Mistral, often dismissed as the European underdog in a field dominated by American giants and Chinese upstarts, just caught up: on Tuesday it dropped its most ambitious release yet, one that gives the open-source competition a run for its money. (Or no money, in this case.)

The four-model family ranges from pocket-sized assistants to a 675-billion-parameter, state-of-the-art system, all under the permissive Apache 2.0 open-source license. The models are publicly available for download: anyone with the proper hardware can run them locally, modify them, fine-tune them, or build applications on top of them.

The flagship model, Mistral Large 3, uses a sparse Mixture-of-Experts architecture that activates only 41 billion of its 675 billion total parameters per token. That engineering choice lets it punch in frontier weight classes while running inference with a per-token compute cost closer to that of a 41-billion-parameter model, though all 675 billion parameters still have to fit in memory.
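To make the idea concrete, here is a minimal, hypothetical sketch of top-k expert routing in plain Python. The expert count, the choice of k=2, the toy scaling "experts", and the dot-product router are illustrative assumptions, not Mistral's actual configuration. What it shows is that a router scores every expert, but only the k winners are actually evaluated, which is why per-token compute tracks active rather than total parameters.

```python
import math
import random

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale, calls, idx):
    # Toy "expert" (hypothetical): scales its input and records that it ran.
    def expert(x):
        calls.append(idx)
        return [scale * v for v in x]
    return expert

def moe_forward(token, experts, router, k=2):
    """Send one token through only the k highest-scoring experts."""
    # One router logit per expert: dot product of the token with that expert's router row.
    logits = [sum(t * w for t, w in zip(token, row)) for row in router]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over the chosen experts only
    out = [0.0] * len(token)
    for gate, i in zip(gates, top):
        y = experts[i](token)  # the remaining experts are never evaluated
        out = [o + gate * v for o, v in zip(out, y)]
    return out, top

random.seed(0)
NUM_EXPERTS, DIM = 8, 4  # hypothetical sizes, chosen for illustration only
calls = []
experts = [make_expert(i + 1, calls, i) for i in range(NUM_EXPERTS)]
router = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 2.0, 0.1]
out, chosen = moe_forward(token, experts, router, k=2)
print("experts used:", chosen, "of", NUM_EXPERTS)
print("expert calls:", len(calls))
print(f"Large 3 active fraction: {41 / 675:.1%}")  # 41B of 675B parameters per token
```

Counting the calls confirms that only two of the eight toy experts run for this token. Applying the same ratio to Large 3's published figures gives the share of weights touched per token, roughly 6%.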

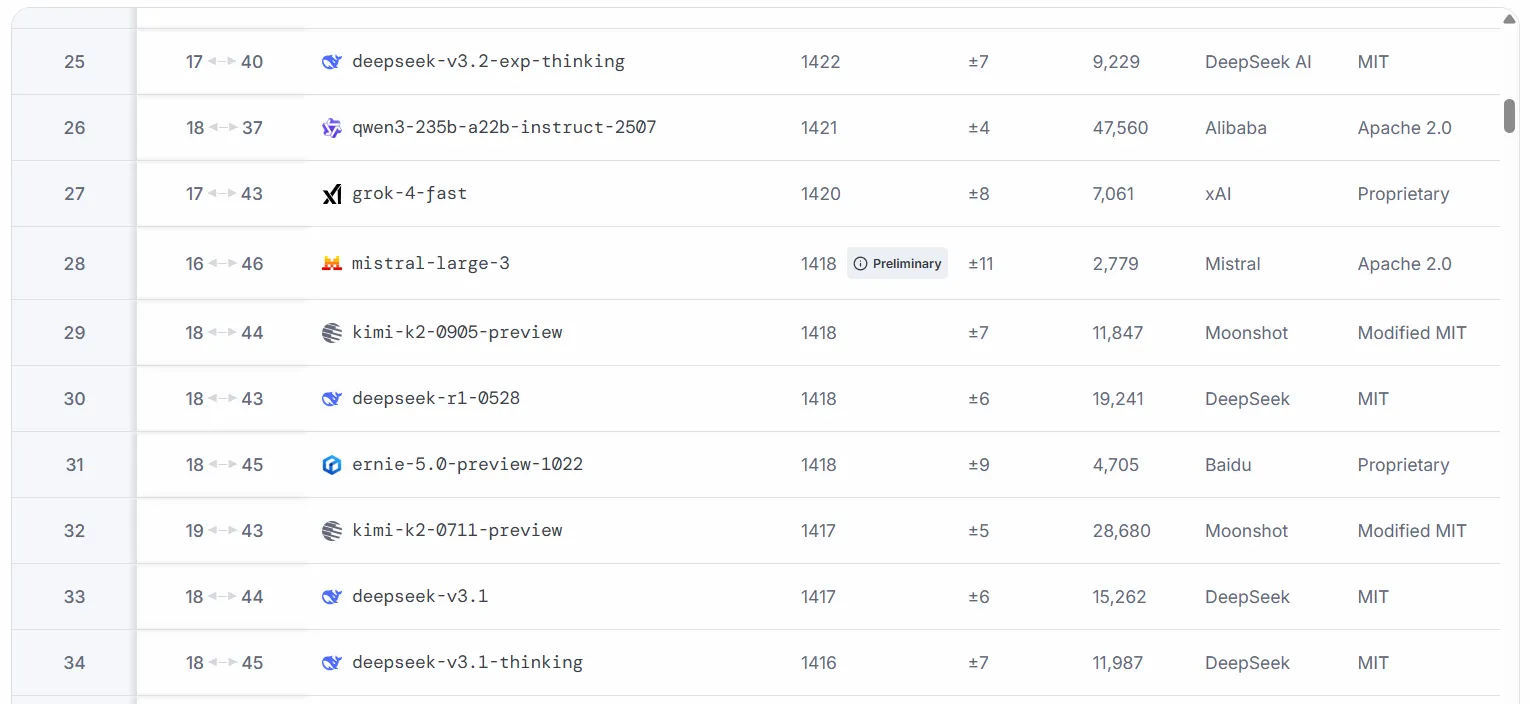

Mistral Large 3 was trained from scratch on 3,000 NVIDIA H200 GPUs and debuted at number two among open-source, non-reasoning models on the LMArena leaderboard.

The benchmark rivalry with DeepSeek tells a complicated story. According to Mistral’s benchmarks, its best model beats DeepSeek V3.1 on several metrics but trails the newer V3.2 by a handful of points on LMArena.

On general knowledge and expert reasoning tasks, the Mistral family holds its own. Where DeepSeek edges ahead is raw coding performance and mathematical logic. But that's to be expected: this release does not include reasoning models, so these models are not trained to work through an explicit chain of thought before answering.

The smaller “Ministral” models are where things get interesting for developers. Three sizes—3B, 8B, and 14B parameters—each ship with base and instruct variants. All support vision input natively. The 3B model caught the attention of AI researcher Simon Willison, who noted it can run entirely in a browser via WebGPU.

If you want to try that one, this Hugging Face Space lets you load it locally and interact with it using your webcam as input.

A competent vision-capable AI in a roughly 3GB file opens up possibilities for developers who need efficiency, and even for hobbyists: drones, robots, laptops running offline, embedded systems in vehicles, and more.
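The roughly 3GB figure is consistent with simple arithmetic: weight size is about parameters × bits per parameter ÷ 8. This back-of-the-envelope sketch assumes a nominal 3 billion parameters and counts weights only (no activations, KV cache, or runtime overhead). The article does not say which precision the ~3GB file uses, so reading it as roughly 8 bits per weight is an inference, not a confirmed detail.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate size of the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

MINISTRAL_3B = 3e9  # nominal parameter count (assumption); the exact figure may differ

for label, bits in [("BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>5}: ~{weight_memory_gb(MINISTRAL_3B, bits):.1f} GB")
```

Under these assumptions the estimate is about 6 GB at 16 bits, 3 GB at 8 bits, and 1.5 GB at 4 bits per weight, which is why aggressive quantization matters so much for phones and embedded boards.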

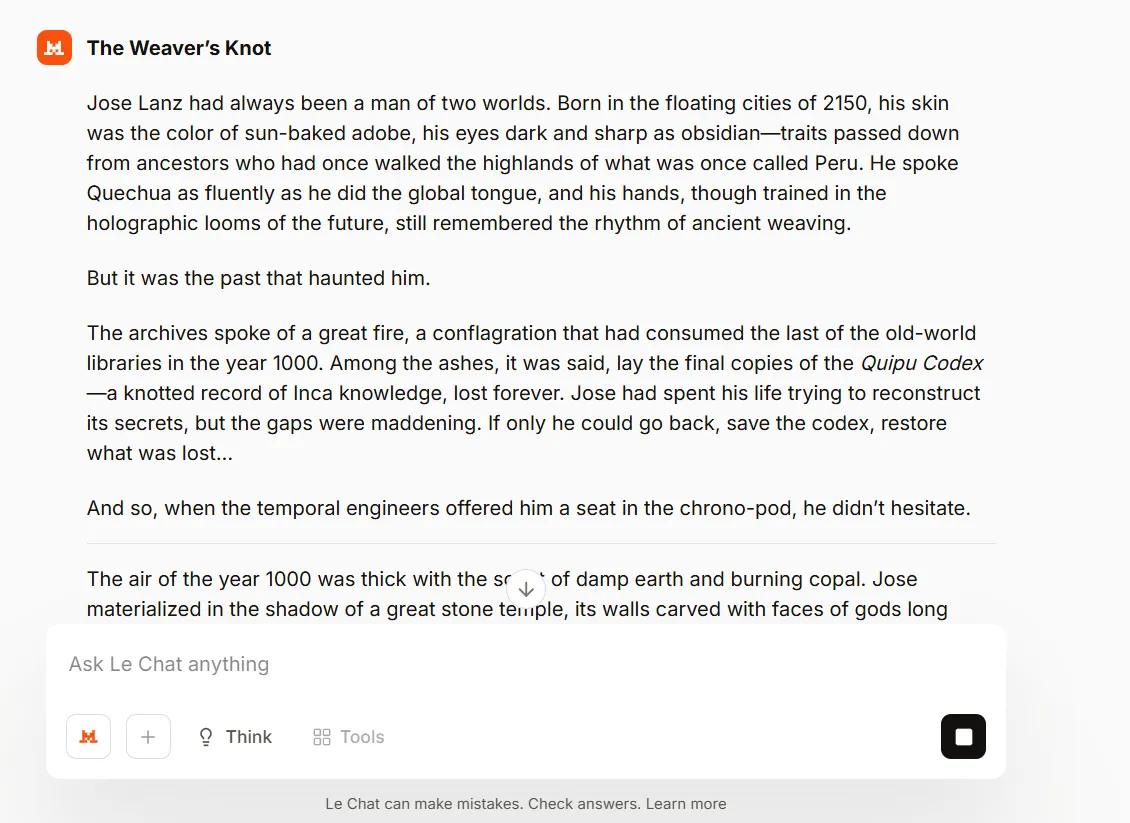

Early testing reveals a split personality across the lineup. In a quick test, we found Mistral Large 3 strong on conversational fluency. Sometimes it has the formatting style of GPT-5 (a similar language style and preference for emojis) but with a more natural cadence.

Mistral Large 3 is also fairly loose in terms of censorship, which makes it a better option than ChatGPT, Claude, or Gemini for quick role play.

For natural language tasks, creative writing, and role play, users find the 14B instruct variant pretty good, but not particularly great. Reddit threads on r/LocalLLaMA flag repetition issues and occasional overreliance on stock phrases inherited from training data, but the model's ability to generate long-form content is a nice plus, especially for its size.

Developers running local inference report that the 3B and 8B models sometimes loop or produce formulaic outputs, particularly on creative tasks.

That said, the 3B model is small enough to run on weak hardware such as smartphones, and it can be fine-tuned for specific purposes. The main competing option in that niche right now is the smallest version of Google's Gemma 3.

Enterprise adoption is already moving. On Monday, HSBC announced a multi-year partnership with Mistral to deploy generative AI across its operations. The bank will run self-hosted models on its own infrastructure, combining its internal technical capabilities with Mistral's expertise. For financial institutions handling sensitive customer data under GDPR, the appeal of an EU-headquartered AI vendor with open weights is not subtle.

Mistral and NVIDIA collaborated on an NVFP4-compressed checkpoint that lets Large 3 run on a single node of eight of NVIDIA's top-end GPUs. NVIDIA claims Ministral 3B hits roughly 385 tokens per second on an RTX 5090, and over 50 tokens per second on Jetson Thor for robotics applications. If those figures hold up, the small models should respond quickly even on a single consumer GPU or an embedded board.
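A back-of-the-envelope check shows why compression matters for fitting on one node. This sketch assumes NVFP4's layout of 4-bit values sharing one 8-bit scale per 16-value block (about 4.5 effective bits per weight), assumes every weight is stored that way, and uses a hypothetical 80 GB per GPU; substitute the actual capacity of your hardware. It ignores the KV cache and activations, which also need room.

```python
def nvfp4_bits_per_weight(block_size=16, value_bits=4, scale_bits=8):
    # Each block of `block_size` 4-bit values shares one 8-bit scale factor;
    # the small per-tensor scale is ignored in this estimate.
    return value_bits + scale_bits / block_size

def weights_gb(num_params, bits):
    """Weight storage in decimal gigabytes for a given number of bits per weight."""
    return num_params * bits / 8 / 1e9

LARGE_3_PARAMS = 675e9
GPUS_PER_NODE = 8
GPU_MEMORY_GB = 80  # hypothetical per-GPU memory, for illustration only

bits = nvfp4_bits_per_weight()  # 4.5 effective bits per weight
need = weights_gb(LARGE_3_PARAMS, bits)
have = GPUS_PER_NODE * GPU_MEMORY_GB
print(f"NVFP4 weights: ~{need:.0f} GB vs {have} GB on the node")
print(f"BF16 weights:  ~{weights_gb(LARGE_3_PARAMS, 16):.0f} GB")
print(f"385 tokens/s = {1000 / 385:.1f} ms per token")
```

Under these assumptions the NVFP4 weights (~380 GB) fit within 640 GB of node memory, while 16-bit weights (~1,350 GB) would not. The last line simply converts NVIDIA's 385 tokens-per-second claim for Ministral 3B into per-token latency.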

A reasoning-optimized version of Large 3 is coming soon, according to the announcement. Until then, DeepSeek R1 and other Chinese models like GLM or Qwen Thinking keep an edge on explicit reasoning tasks. But for enterprises that want frontier capability, open weights, multilingual strength across European languages, and a company that will not be subject to Chinese or American national security laws, the options just expanded from zero to one.
